Research Initiatives

Bandit Learning: Analysis and Applications

Multi-armed Bandits (MAB) have been extensively used to model sequential decision problems with uncertain rewards, such as clinical trials, search engines, online advertising, and notification systems. In these applications, there is a strong motivation to enhance user experience by personalization for users and taking care of their specific demands, and thereby increase revenue with improved user experience. The model of ‘‘contextual bandits” seeks to do so by proposing a MAB model for learning how to act optimally based on contexts (features) of users and arms.

While contextual bandit model has been usefully applied in many areas, it is subject to two major limitations.

First, it neglects the phenomenon of ‘‘reneging” that is common in real-world applications. Reneging here refers to the behavior of users cutting ties with the learner after an unsatisfactory experience, and desisting from any future interactions. This is also referred to as ‘‘churn”, ‘‘disengagement”, ‘‘abandonment”, or ‘‘unsubscribing”. Reneging is common in real-world applications. For instance, in clinical trials, a patient dissatisfied with the effectiveness of a treatment quits all further trials.

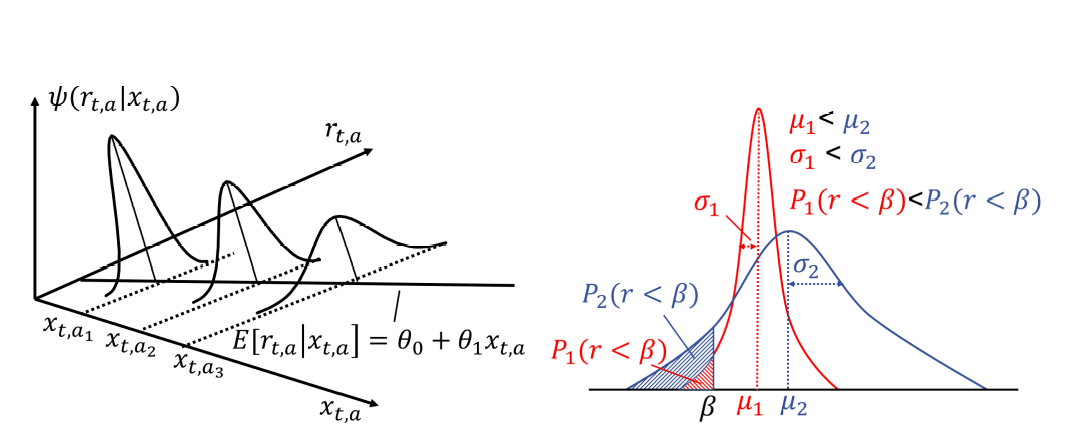

Second, previous studies have usually assumed that rewards are generated from an underlying reward distribution that is homoscedastic, i.e., its variance is independent of contexts. Unfortunately, this model is invalid due to the presence of “heteroscedasticity” in many real-world datasets, and learning algorithms based on it may be improvable. Examples abound in financial applications such as portfolio selection for hedge funds.

To address the above issues, we propose a novel model of contextual bandits that addresses the challenges arising from reneging risk and heteroscedasticity. We develop a UCB-type policy, called HR-UCB, that is proved to achieve a sublinear regret bound with high probability.

|

Selected publications:

Ping-Chun Hsieh, Xi Liu, Anirban Bhattacharya, and P. R. Kumar, ”Stay With Me: Lifetime Maximization Through Heteroscedastic Linear Bandits with Reneging,” International Conference on Machine Learning (ICML), 2019

QoE-Optimal Scheduling For Video Delivery in Wireless Networks

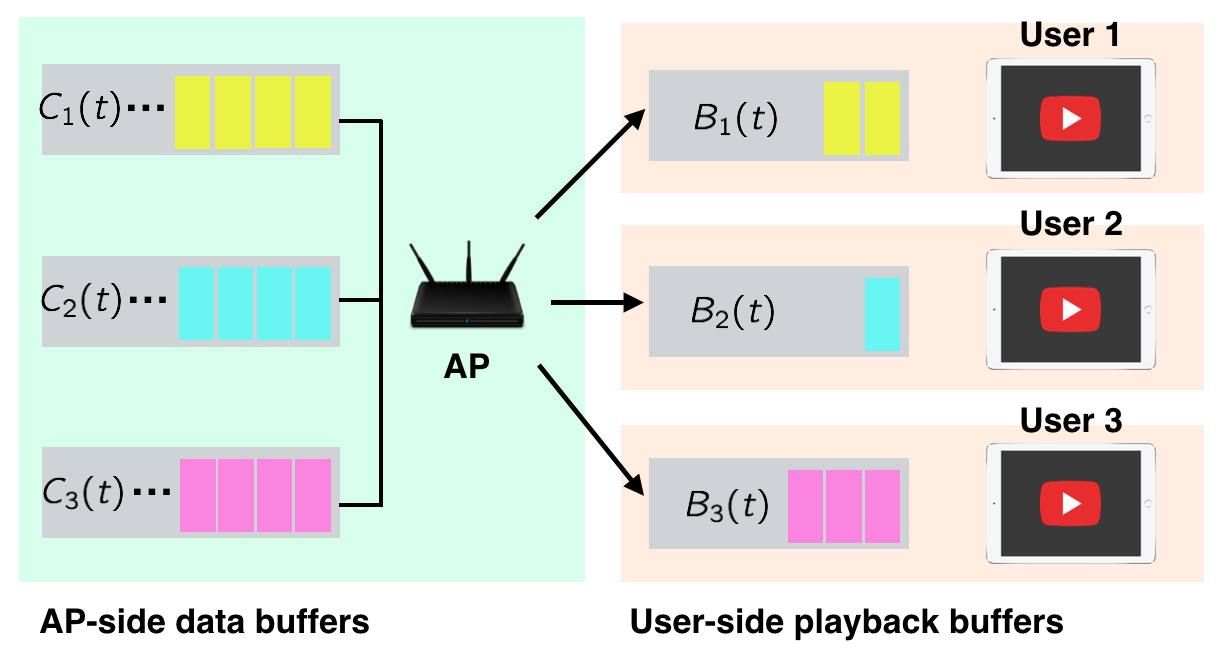

Video data traffic is projected to account for more than three fourths of total mobile traffic by the year 2021. Besides mainstream mobile devices, a large amount of new video-based IoT devices, such as smart TVs and smart headsets for virtual reality, are accelerating the increase of wireless video services. From the perspective of wireless service providers, wireless network algorithms are conventionally designed to meet the performance requirements of a general wireless network, such as throughput and average delay. However, these performance metrics fail to directly characterize real user experience in enjoying video service.

To quantify quality of experience (QoE), various research efforts have been devoted to exploring potential QoE factors by constructing analytical models as well as performing user-centric experimental evaluation. Among the various parameters, it has been shown that video interruption is the dominating factor of QoE for video streaming applications. Therefore, to connect QoE and wireless network algorithms, this research addresses scheduling design for minimizing video interruption experienced by the users. Specifically, we provide an analytical framework to characterize the capacity region for QoE and propose QoE-optimal scheduling policies that achieve the whole capacity region. We study both long-term and short-term QoE by applying diffusion approximation in the heavy-traffic regime and propose QoE-optimal scheduling over both unreliable channels and general fading channels.

|

Selected publications:

Ping-Chun Hsieh and I-Hong Hou, " Heavy-Traffic Analysis of QoE Optimality for On-Demand Video Streams Over Fading Channels," IEEE/ACM Transactions on Networking, vol. 26, no. 4, Aug. 2018, pp. 1768 – 1781.

I-Hong Hou and Ping-Chun Hsieh, " The Capacity of QoE for Wireless Networks with Unreliable Transmissions," Queueing Systems: Theory and Applications (QUESTA), vol. 87, no. 1-2, Oct. 2017, pp. 131-159.

Ultra-Low Latency Wireless Networks: Protocols and Testbed

Protocol Design:

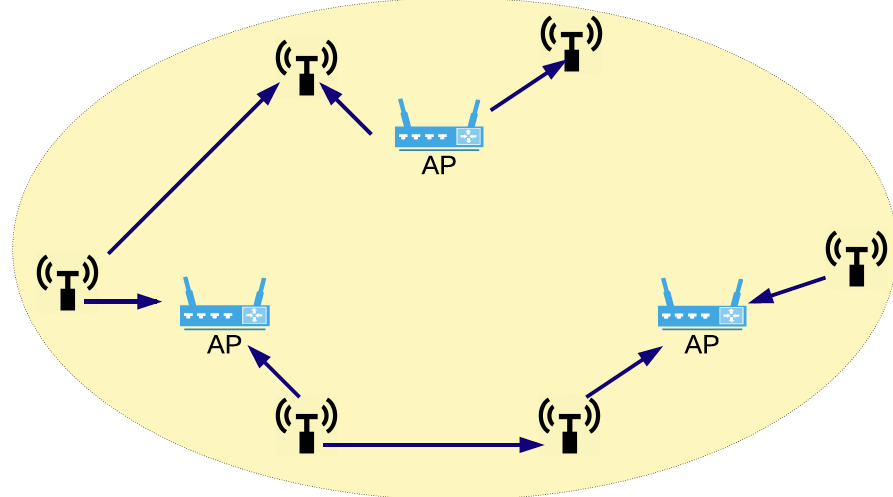

Industrial IoT applications rely heavily on reliable low-latency communication between various sensors and actuators. This can be fulfilled by incorporating real-time wireless networks with strict per-packet deadlines as well as ultra-low packet loss ratios. The performance of a real-time wireless network is usually measured by timely-throughput, i.e. the time average of the amount of data delivered by their deadlines. While there have been many promising centralized network algorithms for wireless access points (AP) with provably good timely-throughput guarantees, they might not be practical in certain settings. For example, in a wireless network with uplink traffic, an AP needs to continually update network states through polling, which can incur signi cant signalling overhead. Moreover, for better coverage or higher network density, these devices might be served by multiple collocated APs, which require additional coordination among them. Therefore, it is of great importance to design an efficient decentralized algorithm for real-time wireless networks.

To address this, we propose a fully-decentralized algorithm that achieves optimal timely-throughput for real-time wireless ad hoc networks by leveraging two widely-used functions: carrier sensing and backoff timers. Different from the conventional approach, the proposed algorithm utilizes a collision-free backo scheme to enforce the transmission priority of different wireless links. This design completely avoids the capacity loss due to collision with quantifiably small backoff overhead. Therefore, the proposed decentralized algorithm can achieve the same timely-throughput performance as the centralized ones.

|

Testbed Development:

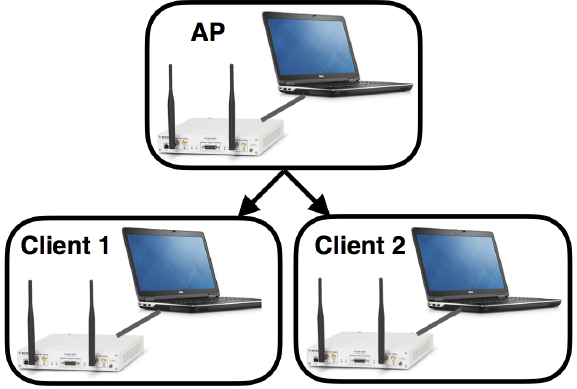

Strict latency requirement is currently one of the most critical challenges for many real-time IoT applications, such as virtual reality (VR) and industrial IoT. They require a round-trip latency as low as one millisecond to provide seamless user experience and reliable real-time control. However, the existing wireless networks cannot provide such stringent latency guarantees. For example, the round-trip latency of LTE is estimated to be at least 20 milliseconds, including the transmission time, scheduling overhead, and hardware processing delay. For Wi-Fi networks, due to the nature of random access, the round-trip time could vary from several milliseconds to hundreds of milliseconds depending on the trac load.

To address this, we develop an ultra-low-latency wireless testbed that is capable of supporting real-time wireless network algorithms.

Through careful architectural design, the proposed software-dened wireless testbed can achieve a per-packet latency below one millisecond for real-time IoT applications.

We implemented real-time wireless scheduling algorithms on our testbed and showed that the total throughput is close to the maximum achievable link throughput with single-digit millisecond per-packet deadlines.

|

Selected publications:

Ping-Chun Hsieh and I-Hong Hou, ”A Decentralized Medium Access Protocol for Real-Time Wireless Ad Hoc Networks With Unreliable Transmissions,” IEEE International Conference on Distributed Computing Systems (ICDCS), 2018.

Simon Yau, Ping-Chun Hsieh, Rajarshi Bhattacharyya, Kartic Bhargav, Srinivas Shakkottai, I-Hong Hou, and P. R. Kumar, ”PULS: Processor-Supported Ultra-Low Latency Scheduling,” ACM International Symposium on Mobile Ad Hoc Networking and Computing (MobiHoc), 2018

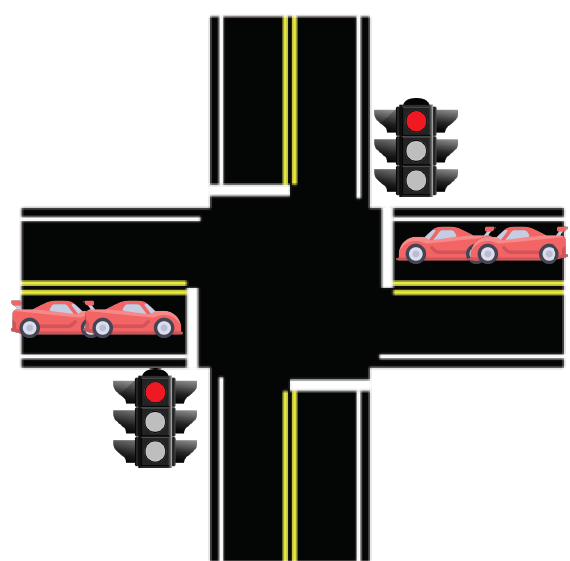

Scheduling for Networked Transportation Systems

In current transportation systems, most intersections follow xed-time-based scheduling policies to determine the traffic signals. However, fixed-time-based policies require complete knowledge of trac demands, which usually change with time and are difficult to predict. Recently, networked transportation systems have introduced a new dimension for designing intelligent traffic signal control algorithms by incorporating the emerging connected-vehicle technology. In networked transportation systems, roadside infrastructure can obtain accurate and real-time information about the number of vehicles waiting in each lane. This allows us to draw an analogy between transportation systems and communication networks: an intersection corresponds to a router, a lane corresponds to a queue, and a vehicle corresponds to a packet. In this analogy, the Max-Pressure policy for communication networks has been applied to transportation systems.

Despite this analogy, there are still essential differences between these two systems.

One major difference is switch-over delay due to the guard time before any traffic signal change. This switch-over delay can cause significant capacity loss and needs to be addressed in scheduling design.

During the transition from conventional transportation systems to fully-connected systems, the scheduling policies also need to coexist with the fixed-time-based policies.

Moreover, queue length estimation may not be perfect and therefore the scheduling policies need to be robust to estimation error.

In view of the above, we propose a distributed throughput-optimal scheduling policy that addresses all the above challenges.

|

Selected publications:

Ping-Chun Hsieh, Xi Liu, Jian Jiao, I-Hong Hou, Yunlong Zhang, and P.R. Kumar, ”Throughput-Optimal Scheduling For Multi-Hop Networked Transportation Systems With Switch-Over Delay,” ACM International Symposium on Mobile Ad Hoc Networking and Computing (MobiHoc), 2017.